How to Select a Journal for Publication: A Comprehensive Guide for Researchers

Journal selection is often done at the last moment and without much thought or strategy. This article explains why this is a mistake. Here, we...

A good journal impact factor (IF) is often the main consideration for researchers when they’re looking for a place to publish their work. Many researchers assume that a high impact factor indicates a more prestigious journal. And that means more recognition for the manuscript author(s).

So, by that logic, the higher the impact factor, the better the journal, right?

Well, it’s not that simple.

In principle, a higher IF is better than a lower IF, but there are many conditions, variations, and other issues to consider.

There’s no single determinant of what makes a good journal impact factor. It depends on the field of research, and what you mean by “good.” What is “good” for a breakthrough immunology study may not count as “good” for an incremental regional economics study.

Using impact factors in the academic world to rank journals remains controversial. The San Francisco Declaration on Research Assessment (DORA), for example, tried to tackle the issue of over-reliance on journal IFs when evaluating published research.

Yet researchers continue to associate a good IF with better quality research. So, until DORA or others develop a better solution, we’re stuck with the IF, simplistic as it may be.

Read on to increase your understanding of impact factors and learn what’s a good one for your research.

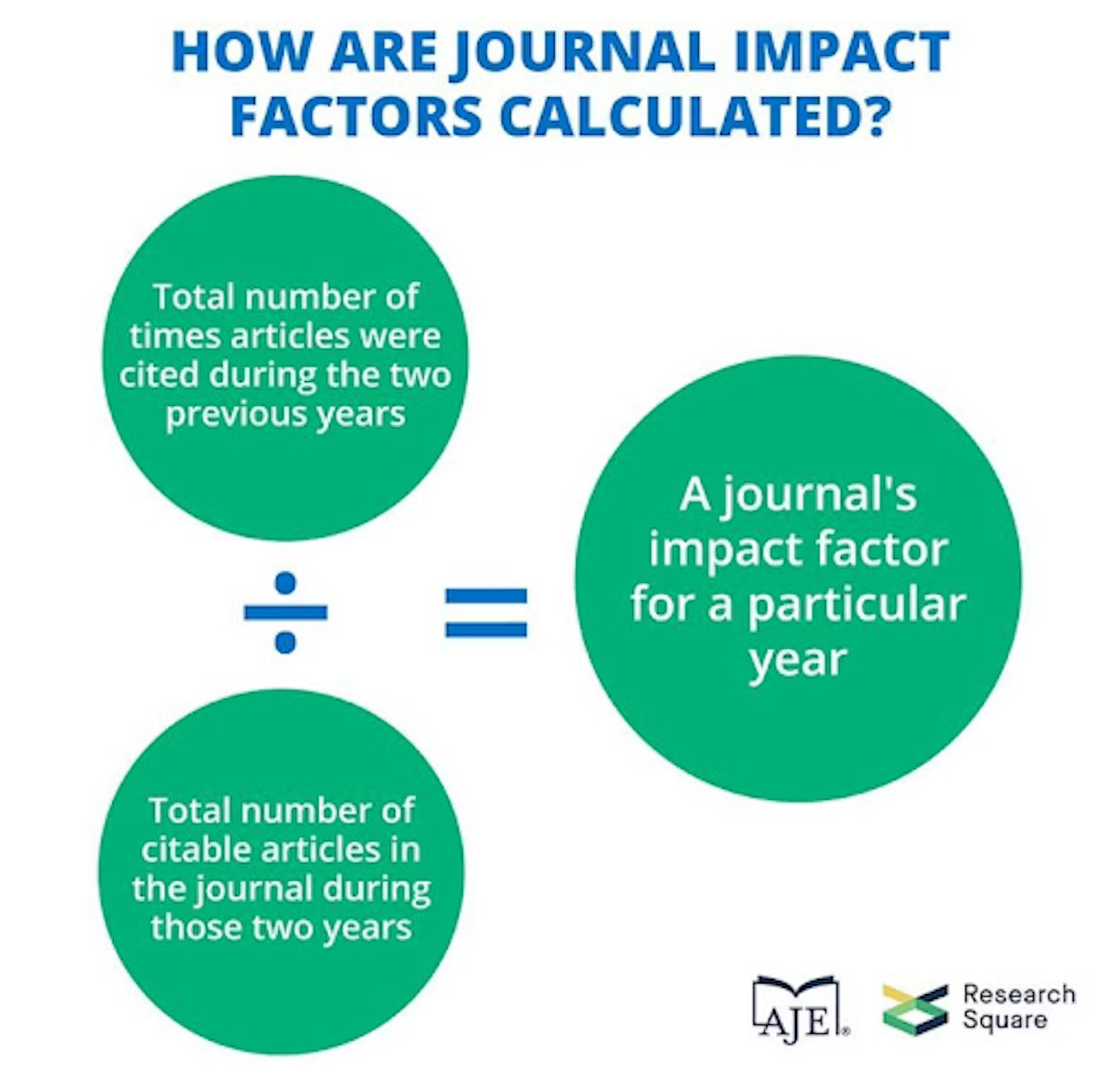

A journal impact factor is a metric that assesses the citation rate of articles published in a particular journal over a specific time – that’s usually 2 years (see below).

For example, an IF of 3 for a given year means that the articles the journal published during the previous 2 years were cited, on average, 3 times each in that year.

The IF for a particular year is calculated as the ratio of the number of citations received in that year by items the journal published in the previous 2 years to the total number of citable items it published in those 2 years.
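To make the arithmetic concrete, here's a minimal Python sketch of the 2-year calculation. It assumes the standard definition: citations received in the IF year by items from the previous 2 years, divided by the citable items published in those 2 years. The function name `impact_factor` and the counts in the example are hypothetical and purely illustrative; they are not real journal data.

```python
def impact_factor(citations_this_year: int, citable_items_prior_two_years: int) -> float:
    """Return a 2-year impact factor.

    citations_this_year: citations received in the IF year by items the
        journal published in the two preceding years.
    citable_items_prior_two_years: citable items (typically articles and
        reviews) the journal published in those two preceding years.
    """
    if citable_items_prior_two_years <= 0:
        raise ValueError("the journal must have published at least one citable item")
    return citations_this_year / citable_items_prior_two_years


# Hypothetical journal: 200 citable items over two years, cited 600 times this year.
example_if = impact_factor(citations_this_year=600, citable_items_prior_two_years=200)
print(f"{example_if:.3f}")  # 3.000
```

Note that the result is an average across the whole journal. It says nothing about how often any individual article was cited.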

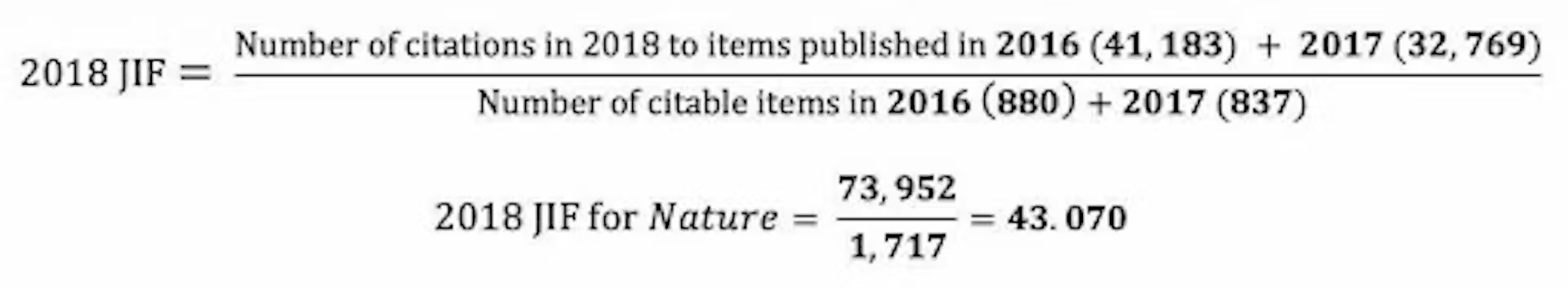

For example, in 2018, Nature had an IF of 43.070. That's a good journal impact factor. This is calculated as follows:

(Adapted from https://clarivate.libguides.com/jcr)

(Source: 2018 Journal Citation Reports)

Clarivate Analytics annually computes IFs for journals indexed in Web of Science. These scores are then collectively published in the Journal Citation Reports (JCR) database.

Clarivate publishes two different JCR databases every year. The Science Citation Index (SCI) is for the STEM (science, technology, engineering, and mathematics) disciplines, and the Social Sciences Citation Index (SSCI) is for, you guessed it, the social sciences. These are the only acceptable and reputable sources for impact factors out there. If your journal is using another index, then beware: it could be predatory.

In addition to the 2-year impact factor, Clarivate offers metrics for short-, medium-, and long-term analysis of a JCR journal’s performance. These metrics include:

Journal ranking within a specific subject category can also be indicated by quartiles. Many universities around the world prefer the use of these metrics rather than raw IF for selecting journals. Four quartiles rank journals from highest to lowest based on the impact factor: Q1, Q2, Q3, and Q4.

Q1 comprises the most (statistically) prestigious journals within the subject category; i.e., the top 25% of the journals on the list. Q2 journals fall in the 25%–50% group, Q3 journals in the 50%–75% group, and finally, Q4 in the 75%–100% group.
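As a rough illustration of how a position in a category list maps to a quartile, here's a short Python sketch. The function name `quartile_from_rank`, the example ranks, and the handling of journals that fall exactly on a boundary are assumptions. Ranking providers may treat ties and boundaries differently.

```python
def quartile_from_rank(rank: int, total_journals: int) -> str:
    """Map a journal's rank within its subject category to Q1-Q4.

    rank: 1 is the highest impact factor in the category.
    total_journals: number of journals listed in the category.
    """
    if total_journals <= 0 or not 1 <= rank <= total_journals:
        raise ValueError("rank must be between 1 and total_journals")

    position = rank / total_journals  # fraction of the list at or above this journal
    if position <= 0.25:
        return "Q1"
    if position <= 0.50:
        return "Q2"
    if position <= 0.75:
        return "Q3"
    return "Q4"


# Hypothetical category of 100 journals.
for rank in (10, 40, 70, 95):
    print(rank, quartile_from_rank(rank, 100))
```

Because the quartile depends only on rank within a category, the same raw IF can land in Q1 in one field and Q3 in another.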

Numbers = status, and many authors, or their institutions, insist on publication in a Q1 or at least a Q2 journal.

An alternative ranking system, and one that is free to access, Scimago Journal & Country Rank, also uses quartiles. Be sure not to confuse the two.

As mentioned, separate JCR databases are published for STEM and social sciences.

The main reason is that there are wide discrepancies in impact factor scores across different research fields. Some of the likely causes for these discrepancies are:

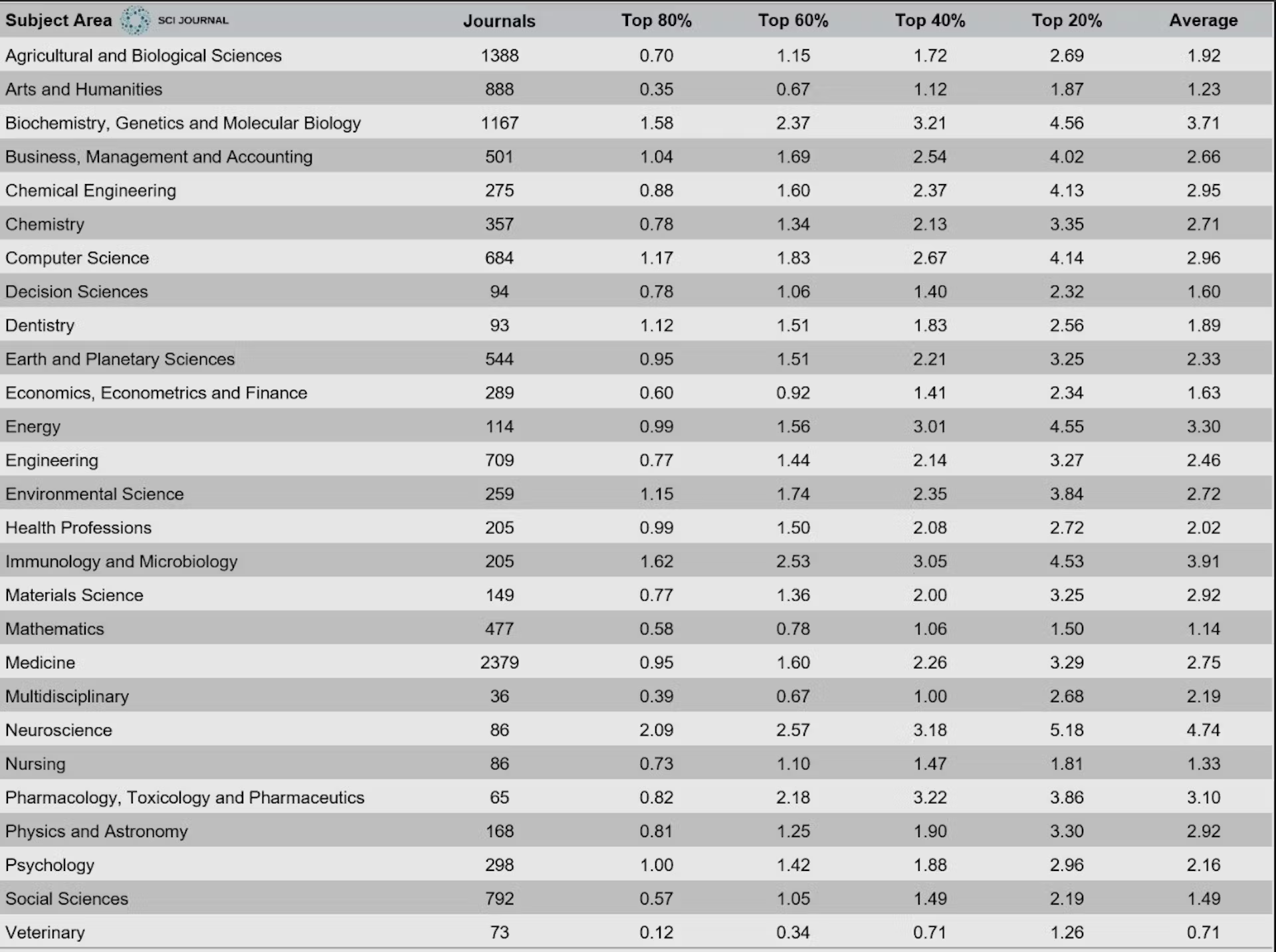

To put things into perspective, data prepared by SCI Journal for the 2018/2019 journal impact factor rankings are shown in this table.

Source: scijournal.org

The table illustrates where a journal subject area ranks in the four classes: top 80%, top 60%, top 40%, and top 20%. Outliers were removed for the sake of cleaner data.

The data demonstrate how journals for subject areas show a range of impact factors.

Large fields, such as the life sciences, generally have higher IFs. They get cited more, so that makes sense. For example, it's natural that a study on a vaccine breakthrough will lead to more citations than a study on community development.

This obviously skews the use of impact factors when assessing researchers across a university. We can’t all keep up with the biologists!

For example, the top-ranked journal by Clarivate in 2019 was CA: A Cancer Journal for Clinicians, which had a remarkably high IF of 292.278. The New England Journal of Medicine, which has long been a prestigious journal, came in second with a high IF of 74.699. Those are amazingly good journal impact factors.

Conversely, reputable journals in smaller fields, such as mathematics, tend to have lower impact factors than those in the natural sciences.

For example, the 2019 JCR impact factors for respectable mathematical journals such as Inventiones Mathematicae and Duke Mathematical Journal were 2.986 and 2.194, respectively.

As for the social sciences, you could simply argue they’re “less popular” than the natural sciences. So, their reputable journals also tend to have lower impact factors.

For example, well-regarded journals such as the American Journal of Sociology and the British Journal of Sociology had JCR 2019 impact factors of 3.232 and 2.908, respectively. Those are good impact factors for the social sciences but would look rather low for a STEM journal, unless it covered a regional or niche topic.

Therefore, what may be seen as an excellent impact factor in mathematics and the social sciences may be viewed as way below average in the life sciences.

That’s not to say the social sciences are less important. It just means they’re comparatively less researched and cited.

The comparison doesn’t end there. Another aspect that shouldn’t be overlooked is the research subfield. Journals in, say, a physics subfield such as astrophysics typically have different impact factors than journals in fluid dynamics.

For example, two well-known astrophysics journals, MNRAS and Astrophysical Journal, had JCR 2019 IFs of 5.356 and 5.745, respectively. Meanwhile, two respectable journals in fluid dynamics, Journal of Fluid Mechanics and Physics of Fluids, had JCR 2019 IFs of 3.354 and 3.514, respectively.

They may not be in The New England Journal of Medicine territory, but all are reputable journals.

You have to look at the bigger picture here because there’s a lot more to consider than the single numeric representation.

If you’re in a field/subfield with high-impact-factor journals, it’s only logical that the cutoff for a good IF will also be high. And, of course, it’ll be lower for a field/subfield with lower impact factor journals.

Impact factor statistics should, thus, be interpreted relatively and with caution, because the scores represented are not absolute.

In short, a good impact factor is whatever your institution, or you, say it is. Failing that, it’s one that's high enough to connote prestige, in a journal that is still a good forum for your research to be read and cited.

Let’s look at a few of the other factors, apart from IF, to help you choose your target journal.

The impact factor was originally used to rank journals, which in turn helps you decide where to submit your research. Some of the pros of an IF include:

Despite its popularity, the impact factor is clearly a flawed metric, and its use to judge whether a journal is good has drawn criticism. That’s what we’ve seen with DORA, among plenty of others.

In addition to the previously mentioned shortcoming of not being able to use the impact factor for comparing journals across fields, other cons include:

The criticism is nothing new (see Kurmis, 2003, among others). But we’ve got to live with it until there’s something better.

On a personal note, we’re rather tired of seeing the great stress that’s placed on researchers to publish in a Q1 journal, especially in certain economies that pressure their researchers to publish when they’d be better off fostering good, reflective, valuable research.

So here are some other factors with impact, even if they’re not impact factors.

A good impact factor may be a requirement by your institution. But it shouldn’t be the only aspect you consider when choosing where to publish your manuscript.

Another key factor is whether the work to be published fits within the aims and scope of the journal.

You can determine this by analyzing the journal’s subjects covered, types of articles published, and peer-review process. Some very targeted journals would welcome your research with open arms.

An additional factor to consider is the target audience. Who is likely to read and cite the article? Where do these researchers publish? This can facilitate the shortlisting of some journals.

Other tips for choosing a journal include:

Well-known publishers like Springer and Elsevier also list factors for choosing a journal.

Scimago Journal Rank (SJR), as mentioned above, is a useful portal that uses citation data to score and rank the journals indexed in Elsevier’s Scopus database.

The SJR indicator (PDF) measures not only the citations a journal receives but also the importance or prestige of the journals those citations come from. It can be used to view journal rankings by subject category and compare journals within the same field.

And here’s a great scholarly article with useful references that provide information on how to identify and avoid submitting to predatory journals.

What makes a good impact factor boils down to the field of research and the host of arbiters of “good.” Highly reputable journals may have low impact factors not because they lack credibility, but because they’re in specialized/niche fields with low citations.

The interpretation of what is a “good” journal impact factor varies, possibly due to the ambitious nature of some researchers or the ignorance of others.

An IF can indeed serve as a starting point during decision-making, but, if possible, and if you don’t have to meet some arbitrary target, more emphasis should be placed on publishing high-quality research.

The prevailing mindset should be that a journal benefits more from the good-quality research it publishes than the other way around.
